Vocal FX Chains

About Vocal FX Chains

A common use case for audio processing is making vocal performances more interesting by applying effects to them.

Using our basic effects as building blocks, we can build complex FX chains with full control over their parameters.

Such FX chains can be applied after the recording is made, but the low-latency capabilities of the SDK also allow us to apply the effects in real time, while recording.
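To put "low latency" in numbers: every audio buffer on the monitoring path adds frames / sample rate of delay. The sketch below assumes a hypothetical 256-frame buffer at 48 kHz and a path with one input and one output buffer. It ignores converter and driver latency, so it is only a lower bound, and the buffer sizes the SDK actually uses are not stated in this example.

```swift
import Foundation

// Delay added by one audio buffer, in milliseconds: frames / sampleRate.
func bufferLatencyMs(frames: Int, sampleRate: Double) -> Double {
    return Double(frames) / sampleRate * 1000.0
}

// Hypothetical monitoring path: one input buffer plus one output buffer.
func monitoringLatencyMs(frames: Int, sampleRate: Double) -> Double {
    return 2 * bufferLatencyMs(frames: frames, sampleRate: sampleRate)
}

let oneBuffer = bufferLatencyMs(frames: 256, sampleRate: 48_000)
let inputPlusOutput = monitoringLatencyMs(frames: 256, sampleRate: 48_000)
print(String(format: "%.2f ms per buffer, %.2f ms for input + output", oneBuffer, inputPlusOutput))
```

Halving the buffer size halves these figures, at the cost of more frequent audio callbacks.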

In this example, we create an app for recording vocals over a beat, with real-time and post-production FX chains for the vocals and the ability to share the end result.

Vocal FX Chains App

You can find the source code at the following links:

- Vocal FX Chains App - iOS

- Vocal FX Chains App - Android

The app has the following features:

- Vocal recording over a beat

- Ability to apply FX chains during recording

- Ability to replace FX chains after recording

- Customization of FX chain presets

- Sharing of the completed recording

It consists of the following screens:

- Recording: Vocal recording over a beat, FX chain enabling and selection

- FX Editing: Post-production FX chain selection and parameterization for the recording, plus export and sharing

We will implement two FX chains: a Harmonizer Effect and a Radio Effect. Both are described in detail after the two screens.

This example uses the Superpowered Extension.

Why use the Superpowered Extension instead of Superpowered directly?

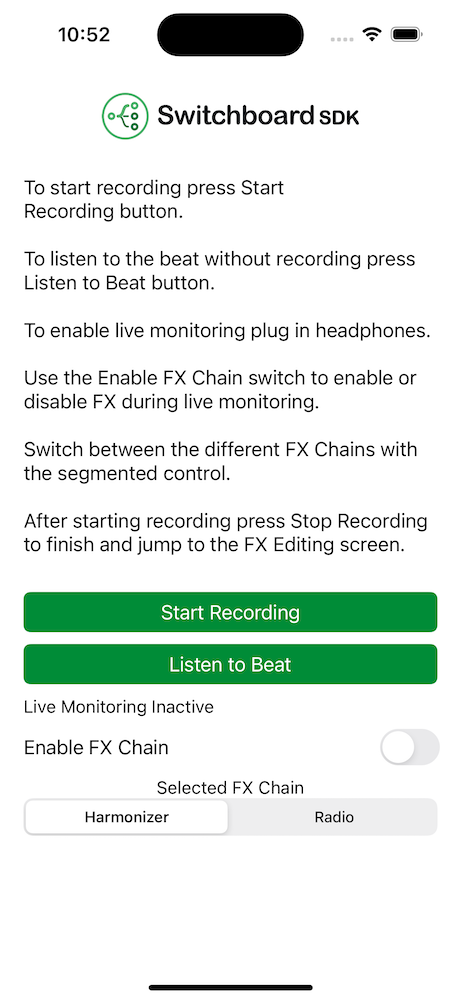

Recording

The Recording screen has a button to start and stop recording, an FX chain selection control, and a button to enable or disable the selected FX chain.

When recording starts, the beat starts playing as well. The audio system saves the clean (unprocessed) vocal recording separately, so that it can be rendered with a different FX chain in a later step.

When using headphones, you can monitor your own voice in real time with the selected FX chain applied. When the output is the built-in speaker or receiver, live monitoring is muted.
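The monitoring rule implemented by `isSpeaker()` and the mute node in the Swift code for this screen can be restated as a pure function. This is a sketch: `OutputRoute` is a hypothetical stand-in for the `AVAudioSession.Port` values the app checks, and the reason for muting on the speaker (presumably to keep the monitored voice from leaking back into the microphone) is an assumption rather than something the source states.

```swift
// Hypothetical stand-in for the output ports the app inspects.
enum OutputRoute {
    case builtInSpeaker
    case builtInReceiver
    case headphones
    case bluetoothA2DP
}

// Same rule as isSpeaker() in RecordingAudioSystem.
func isSpeaker(_ route: OutputRoute) -> Bool {
    return route == .builtInSpeaker || route == .builtInReceiver
}

// The mute node is closed on the speaker, so monitoring is active everywhere else.
func isLiveMonitoringActive(on route: OutputRoute) -> Bool {
    return !isSpeaker(route)
}

for route in [OutputRoute.builtInSpeaker, .builtInReceiver, .headphones, .bluetoothA2DP] {
    print("\(route): monitoring \(isLiveMonitoringActive(on: route) ? "on" : "off")")
}
```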

Audio Graph

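Our audio system for vocal recording has to record a clean input while, on a separate branch, applying the effects and mixing the result with the beat track. The output of the mixer is routed to the speakers.

The audio graph for the Recording screen works as follows: the microphone input goes into a bus splitter, which feeds the recorder (clean vocals), the FX chain, and a dry path. A bus select node picks either the FX output or the dry signal, which passes through a mute node into the mixer, where it is combined with the beat player before reaching the output.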

To run our FX chains, we use a SubgraphProcessorNode, to which we pass our FX audio graphs. This node sits between the input splitter and the bus select node, so only the monitored signal is processed while the recorder keeps the clean input. SubgraphProcessorNode can hot-plug different audio graphs while running in real time, which lets us switch between the FX chains.
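The data flow described above can be modelled at the buffer level in plain Swift. This is a toy model, not the Switchboard API: every node is reduced to a function on `[Float]` buffers, and `RecordingGraphModel` and `BufferEffect` are hypothetical names. Replacing `fxChain` between two buffers mimics what the SubgraphProcessorNode does when it hot-plugs a different graph; the sketch does no thread synchronization, which a real-time implementation would need.

```swift
// Toy model: every node is reduced to a function on a buffer of samples.
typealias BufferEffect = ([Float]) -> [Float]

struct RecordingGraphModel {
    var fxChain: BufferEffect  // graph currently hosted by the subgraph node
    var applyFXChain: Bool     // bus select: true picks the FX branch, false the dry one
    var isMuted: Bool          // mute node on the monitoring branch

    // Processes one buffer; returns what the recorder stores and what reaches the output.
    func process(input: [Float], beat: [Float]) -> (recorded: [Float], output: [Float]) {
        precondition(input.count == beat.count, "buffers must have the same length")
        let recorded = input                                  // splitter → recorder: clean copy
        let selected = applyFXChain ? fxChain(input) : input  // splitter → FX or dry → bus select
        let monitored = isMuted ? [Float](repeating: 0, count: input.count) : selected
        let output = zip(monitored, beat).map { $0 + $1 }     // mixer: voice + beat
        return (recorded, output)
    }
}

var graph = RecordingGraphModel(fxChain: { $0.map { $0 * 0.5 } }, applyFXChain: true, isMuted: false)
let first = graph.process(input: [0.2, -0.4], beat: [0.1, 0.1])

// "Hot-plug" a different chain between two buffers; the recorded signal is unaffected.
graph.fxChain = { $0.map { -$0 } }
let second = graph.process(input: [0.2, -0.4], beat: [0.1, 0.1])
print(first.output, second.output)
```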

Code Example

The Swift (iOS) implementation comes first, followed by the Kotlin (Android) implementation.

```swift
import AVFoundation
import SwitchboardSDK
import SwitchboardAudioEffects

protocol RecordingAudioSystemDelegate {
    func recordingAudioSystem(_: RecordingAudioSystem, isLiveMonitoringActive: Bool)
}

class RecordingAudioSystem: AudioSystem {
    // Splits the microphone signal into the recording, FX and dry branches
    let inputSplitterNode = SBBusSplitterNode()
    // Records the clean (unprocessed) vocals
    let inputRecorderNode = SBRecorderNode()
    // Hosts the audio graph of the selected FX chain
    let fxChainNode = SBSubgraphProcessorNode()
    // Chooses between the FX branch (bus 0) and the dry branch (bus 1)
    let busSelectNode = SBBusSelectNode()
    // Silences live monitoring when playing through the built-in speaker or receiver
    let muteNode = SBMuteNode()
    let beatPlayerNode = SBAudioPlayerNode()
    let mixerNode = SBMixerNode()

    let harmonizer = HarmonizerEffect()
    let radio = RadioEffect()

    var applyFXChain: Bool = Config.applyFXChain {
        didSet {
            busSelectNode.selectedBus = applyFXChain ? 0 : 1
            Config.applyFXChain = applyFXChain
        }
    }

    var delegate: RecordingAudioSystemDelegate?

    override init() {
        super.init()
        audioEngine.delegate = self

        busSelectNode.selectedBus = applyFXChain ? 0 : 1
        selectFXChain()

        audioGraph.addNode(inputSplitterNode)
        audioGraph.addNode(inputRecorderNode)
        audioGraph.addNode(fxChainNode)
        audioGraph.addNode(busSelectNode)
        audioGraph.addNode(muteNode)
        audioGraph.addNode(beatPlayerNode)
        audioGraph.addNode(mixerNode)

        audioGraph.connect(audioGraph.inputNode, to: inputSplitterNode)
        audioGraph.connect(inputSplitterNode, to: fxChainNode)
        audioGraph.connect(fxChainNode, to: busSelectNode)        // bus 0: FX branch
        audioGraph.connect(inputSplitterNode, to: busSelectNode)  // bus 1: dry branch
        audioGraph.connect(inputSplitterNode, to: inputRecorderNode)
        audioGraph.connect(busSelectNode, to: muteNode)
        audioGraph.connect(beatPlayerNode, to: mixerNode)
        audioGraph.connect(muteNode, to: mixerNode)
        audioGraph.connect(mixerNode, to: audioGraph.outputNode)

        beatPlayerNode.load(Bundle.main.url(forResource: "trap130bpm", withExtension: "mp3")!.absoluteString)
        beatPlayerNode.isLoopingEnabled = true

        muteNode.isMuted = isSpeaker()

        audioEngine.voiceProcessingEnabled = false
        audioEngine.microphoneEnabled = true
    }

    // Loads the graph of the FX chain selected in the settings into the subgraph node
    func selectFXChain() {
        switch Config.selectedFXChainIndex {
        case 0:
            fxChainNode.audioGraph = harmonizer.audioGraph
        default:
            fxChainNode.audioGraph = radio.audioGraph
        }
    }

    func startRecording() {
        inputRecorderNode.start()
    }

    // Saves the clean vocals so they can be re-rendered on the FX Editing screen
    func stopRecording() {
        inputRecorderNode.stop(Config.cleanVocalRecordingFilePath, withFormat: Config.recordingFormat)
    }

    var isRecording: Bool {
        return inputRecorderNode.isRecording
    }

    func startBeat() {
        beatPlayerNode.play()
    }

    func stopBeat() {
        beatPlayerNode.stop()
    }

    var isBeatPlaying: Bool {
        return beatPlayerNode.isPlaying
    }

    func isSpeaker() -> Bool {
        return audioEngine.currentOutputRoute == .builtInSpeaker || audioEngine.currentOutputRoute == .builtInReceiver
    }
}

extension RecordingAudioSystem: SBAudioEngineDelegate {
    func audioEngine(_: SBAudioEngine, inputRouteChanged currentInputRoute: AVAudioSession.Port) {}

    // Re-evaluates live monitoring whenever the output route changes (e.g. headphones plugged in)
    func audioEngine(_: SBAudioEngine, outputRouteChanged currentOutputRoute: AVAudioSession.Port) {
        muteNode.isMuted = isSpeaker()
        delegate?.recordingAudioSystem(self, isLiveMonitoringActive: !isSpeaker())
    }
}
```

```kotlin
import com.synervoz.switchboard.sdk.audiographnodes.AudioPlayerNode
import com.synervoz.switchboard.sdk.audiographnodes.BusSelectNode
import com.synervoz.switchboard.sdk.audiographnodes.BusSplitterNode
import com.synervoz.switchboard.sdk.audiographnodes.MixerNode
import com.synervoz.switchboard.sdk.audiographnodes.MuteNode
import com.synervoz.switchboard.sdk.audiographnodes.RecorderNode
import com.synervoz.switchboard.sdk.audiographnodes.SubgraphProcessorNode
import com.synervoz.vocalfxchainsapp.Config

class RecordingAudioSystem : AudioSystem() {
    // Splits the microphone signal into the recording, FX and dry branches
    val inputSplitterNode = BusSplitterNode()
    // Records the clean (unprocessed) vocals
    val inputRecorderNode = RecorderNode()
    // Hosts the audio graph of the selected FX chain
    val fxChainNode = SubgraphProcessorNode()
    // Chooses between the FX branch (bus 0) and the dry branch (bus 1)
    val busSelectNode = BusSelectNode()
    // Live monitoring starts muted; see enableLiveMonitoring()
    val muteNode = MuteNode()
    val beatPlayerNode = AudioPlayerNode()
    val mixerNode = MixerNode()

    val harmonizer = HarmonizerEffect()
    val radio = RadioEffect()

    var applyFXChain: Boolean = Config.applyFXChain
        set(value) {
            field = value
            busSelectNode.selectedBus = if (value) 0 else 1
            Config.applyFXChain = value
        }

    init {
        busSelectNode.selectedBus = if (applyFXChain) 0 else 1
        selectFXChain()

        audioGraph.addNode(inputSplitterNode)
        audioGraph.addNode(inputRecorderNode)
        audioGraph.addNode(fxChainNode)
        audioGraph.addNode(busSelectNode)
        audioGraph.addNode(muteNode)
        audioGraph.addNode(beatPlayerNode)
        audioGraph.addNode(mixerNode)

        audioGraph.connect(audioGraph.inputNode, inputSplitterNode)
        audioGraph.connect(inputSplitterNode, fxChainNode)
        audioGraph.connect(fxChainNode, busSelectNode)        // bus 0: FX branch
        audioGraph.connect(inputSplitterNode, busSelectNode)  // bus 1: dry branch
        audioGraph.connect(inputSplitterNode, inputRecorderNode)
        audioGraph.connect(busSelectNode, muteNode)
        audioGraph.connect(beatPlayerNode, mixerNode)
        audioGraph.connect(muteNode, mixerNode)
        audioGraph.connect(mixerNode, audioGraph.outputNode)

        beatPlayerNode.isLoopingEnabled = true
        muteNode.isMuted = true
        audioEngine.enableMicrophone(true)
    }

    // Opens or closes the live monitoring path
    fun enableLiveMonitoring(enable: Boolean) {
        muteNode.isMuted = !enable
    }

    // Loads the graph of the FX chain selected in the settings into the subgraph node
    fun selectFXChain() {
        when (Config.selectedFXChainIndex) {
            0 -> fxChainNode.setAudioGraph(harmonizer.audioGraph)
            else -> fxChainNode.setAudioGraph(radio.audioGraph)
        }
    }

    fun startRecording() {
        inputRecorderNode.start()
    }

    // Saves the clean vocals so they can be re-rendered on the FX Editing screen
    fun stopRecording() {
        val path = Config.cleanVocalRecordingFilePath
        inputRecorderNode.stop(path, Config.recordingFormat)
    }

    val isRecording: Boolean
        get() = inputRecorderNode.isRecording

    fun startBeat() {
        beatPlayerNode.play()
    }

    fun stopBeat() {
        beatPlayerNode.stop()
    }

    val isBeatPlaying: Boolean
        get() = beatPlayerNode.isPlaying
}
```

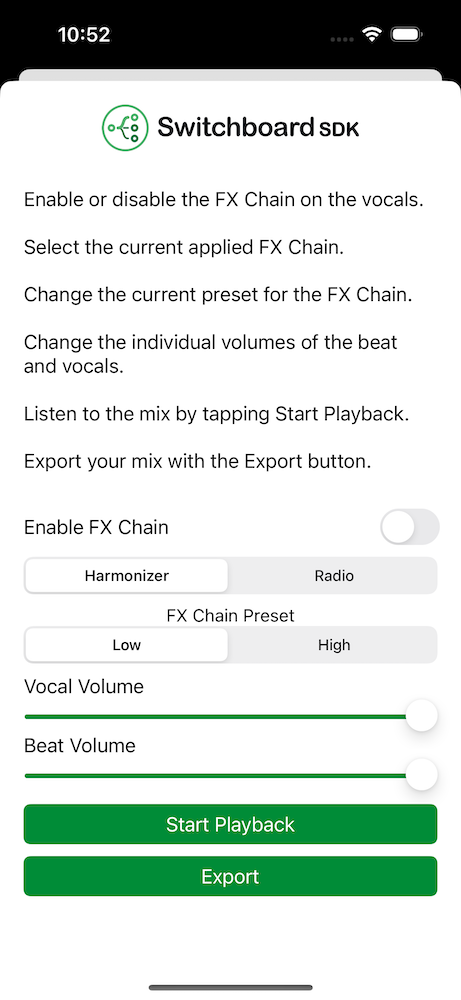

FX Editing

The FX editing screen consists of an FX chain selection control and the controls for the various FX parameters.
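In the app, each preset is applied imperatively by `setLowPreset()` and `setHighPreset()`. One alternative is to keep the preset values as data. The sketch below is hypothetical: `HarmonizerPreset`, `ReverbSettings` and `TunerSpeed` are not SDK types, and only the numbers are copied from `HarmonizerEffect` later in this example.

```swift
// Hypothetical stand-in for the pitch correction speed setting.
enum TunerSpeed { case medium, extreme }

struct ReverbSettings: Equatable {
    var mix: Float, width: Float, damp: Float, roomSize: Float, predelayMs: Float
}

struct HarmonizerPreset: Equatable {
    var tunerSpeed: TunerSpeed
    var harmonyCents: Int   // voices are shifted by -harmonyCents and +harmonyCents
    var harmonyGain: Float  // gain applied to each shifted voice
    var reverb: ReverbSettings

    static let low = HarmonizerPreset(
        tunerSpeed: .medium, harmonyCents: 400, harmonyGain: 0.4,
        reverb: ReverbSettings(mix: 0.008, width: 0.7, damp: 0.5, roomSize: 0.5, predelayMs: 10))

    static let high = HarmonizerPreset(
        tunerSpeed: .extreme, harmonyCents: 400, harmonyGain: 1.0,
        reverb: ReverbSettings(mix: 0.015, width: 0.7, damp: 0.5, roomSize: 0.75, predelayMs: 10))

    // Mirrors the app's switch: 0 selects the low preset, any other value the high one.
    static func fromConfig(_ index: Int) -> HarmonizerPreset {
        return index == 0 ? .low : .high
    }
}

print(HarmonizerPreset.fromConfig(0).reverb.roomSize, HarmonizerPreset.fromConfig(1).reverb.roomSize)
```

A data form like this makes presets easy to compare, persist, or extend with a third level; the trade-off is an extra step that copies the values onto the nodes.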

When you select a new FX chain, the previously saved clean vocals are played back through the new chain, so you can hear the change instantly.

The sharing feature lets you save the completed recording or share it through the usual channels.

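Audio Graph

The audio graph for the FX Editing screen works as follows: the beat player and the clean vocal player each feed a gain node. The vocal signal is then split: one branch goes through the FX chain (the SubgraphProcessorNode), the other stays dry, and a bus select node picks one of them. The selected vocal signal and the beat are combined in the mixer and sent to the output.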

The same audio graph will be used with the Offline Graph Renderer to render the final mix to an output file which can be shared.
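Offline rendering differs from live playback in that no real-time clock drives it: the graph is pulled block by block as fast as processing allows until the requested duration is covered. The sketch below illustrates only that loop. `renderOffline`, `framesToRender` and the `renderBlock` closure are hypothetical and are not `SBOfflineGraphRenderer` APIs; `seconds` plays the role of `maxNumberOfSecondsToRender` in the app.

```swift
// Number of frames needed to cover `seconds` of audio at `sampleRate`.
func framesToRender(seconds: Double, sampleRate: Double) -> Int {
    return Int((seconds * sampleRate).rounded())
}

// Pulls fixed-size blocks from `renderBlock` until the requested duration is covered.
func renderOffline(seconds: Double, sampleRate: Double, blockSize: Int,
                   renderBlock: (_ startFrame: Int, _ frameCount: Int) -> [Float]) -> [Float] {
    precondition(blockSize > 0, "blockSize must be positive")
    let totalFrames = framesToRender(seconds: seconds, sampleRate: sampleRate)
    var output: [Float] = []
    output.reserveCapacity(totalFrames)
    var start = 0
    while start < totalFrames {
        let count = min(blockSize, totalFrames - start)  // the last block may be shorter
        output.append(contentsOf: renderBlock(start, count))
        start += count
    }
    return output
}

// As in renderMix(), render at the higher of the two source sample rates.
let sampleRate = max(44_100.0, 48_000.0)
let mix = renderOffline(seconds: 0.01, sampleRate: sampleRate, blockSize: 256) { start, count in
    // Stand-in for the audio graph: a ramp, so each sample holds its frame index.
    (start..<(start + count)).map { Float($0) }
}
print(mix.count, mix.last ?? -1)
```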

Code Example

The Swift (iOS) implementation comes first, followed by the Kotlin (Android) implementation.

```swift
import SwitchboardSDK

class FXEditingAudioSystem: AudioSystem {
    let beatPlayerNode = SBAudioPlayerNode()
    let beatGainNode = SBGainNode()
    // Plays back the clean vocals saved on the Recording screen
    let vocalPlayerNode = SBAudioPlayerNode()
    let vocalGainNode = SBGainNode()
    let vocalPlayerSplitterNode = SBBusSplitterNode()
    // Hosts the audio graph of the selected FX chain
    let fxChainNode = SBSubgraphProcessorNode()
    // Chooses between the FX branch (bus 0) and the dry branch (bus 1)
    let busSelectNode = SBBusSelectNode()
    let mixerNode = SBMixerNode()
    // Renders the same graph to a file for exporting and sharing
    let offlineGraphRenderer = SBOfflineGraphRenderer()

    let harmonizer = HarmonizerEffect()
    let radio = RadioEffect()

    var applyFXChain: Bool = Config.applyFXChain {
        didSet {
            busSelectNode.selectedBus = applyFXChain ? 0 : 1
            Config.applyFXChain = applyFXChain
        }
    }

    override init() {
        super.init()

        busSelectNode.selectedBus = applyFXChain ? 0 : 1
        selectFXChain()
        selectFXChainPreset()

        audioGraph.addNode(beatPlayerNode)
        audioGraph.addNode(beatGainNode)
        audioGraph.addNode(vocalPlayerNode)
        audioGraph.addNode(vocalGainNode)
        audioGraph.addNode(vocalPlayerSplitterNode)
        audioGraph.addNode(fxChainNode)
        audioGraph.addNode(busSelectNode)
        audioGraph.addNode(mixerNode)

        audioGraph.connect(beatPlayerNode, to: beatGainNode)
        audioGraph.connect(beatGainNode, to: mixerNode)
        audioGraph.connect(vocalPlayerNode, to: vocalGainNode)
        audioGraph.connect(vocalGainNode, to: vocalPlayerSplitterNode)
        audioGraph.connect(vocalPlayerSplitterNode, to: fxChainNode)
        audioGraph.connect(fxChainNode, to: busSelectNode)              // bus 0: FX branch
        audioGraph.connect(vocalPlayerSplitterNode, to: busSelectNode)  // bus 1: dry branch
        audioGraph.connect(busSelectNode, to: mixerNode)
        audioGraph.connect(mixerNode, to: audioGraph.outputNode)

        beatPlayerNode.load(Bundle.main.url(forResource: "trap130bpm", withExtension: "mp3")!.absoluteString)
        beatPlayerNode.isLoopingEnabled = true

        vocalPlayerNode.load(Config.cleanVocalRecordingFilePath, withFormat: Config.recordingFormat)
        vocalPlayerNode.isLoopingEnabled = true
        // Loop the beat over the same length as the vocal recording
        beatPlayerNode.endPosition = vocalPlayerNode.duration()

        audioEngine.voiceProcessingEnabled = false
        audioEngine.microphoneEnabled = false
    }

    // Loads the graph of the FX chain selected in the settings into the subgraph node
    func selectFXChain() {
        switch Config.selectedFXChainIndex {
        case 0:
            fxChainNode.audioGraph = harmonizer.audioGraph
        default:
            fxChainNode.audioGraph = radio.audioGraph
        }
    }

    // Applies the low or high preset of the selected FX chain
    func selectFXChainPreset() {
        switch Config.selectedFXChainIndex {
        case 0:
            switch Config.harmonizerPreset {
            case 0:
                harmonizer.setLowPreset()
            default:
                harmonizer.setHighPreset()
            }
        default:
            switch Config.radioPreset {
            case 0:
                radio.setLowPreset()
            default:
                radio.setHighPreset()
            }
        }
    }

    func startPlayback() {
        beatPlayerNode.play()
        vocalPlayerNode.play()
    }

    func stopPlayback() {
        beatPlayerNode.stop()
        vocalPlayerNode.stop()
    }

    var isPlaying: Bool {
        return beatPlayerNode.isPlaying
    }

    func renderMix() {
        // Render at the higher of the two source sample rates
        let sampleRate = max(vocalPlayerNode.sourceSampleRate, beatPlayerNode.sourceSampleRate)
        // Rewind both players so the render starts from the beginning
        vocalPlayerNode.position = 0.0
        beatPlayerNode.position = 0.0
        offlineGraphRenderer.sampleRate = sampleRate
        // Render exactly one pass of the vocal recording
        offlineGraphRenderer.maxNumberOfSecondsToRender = vocalPlayerNode.duration()
        startPlayback()
        offlineGraphRenderer.processGraph(audioGraph, withOutputFile: Config.finalMixRecordingFile, withOutputFileCodec: .wav)
        stopPlayback()
    }
}
```

```kotlin
import com.synervoz.switchboard.sdk.audiograph.OfflineGraphRenderer
import com.synervoz.switchboard.sdk.audiographnodes.AudioPlayerNode
import com.synervoz.switchboard.sdk.audiographnodes.BusSelectNode
import com.synervoz.switchboard.sdk.audiographnodes.BusSplitterNode
import com.synervoz.switchboard.sdk.audiographnodes.GainNode
import com.synervoz.switchboard.sdk.audiographnodes.MixerNode
import com.synervoz.switchboard.sdk.audiographnodes.SubgraphProcessorNode
import com.synervoz.vocalfxchainsapp.Config
import kotlin.math.max

class FXEditingAudioSystem : AudioSystem() {
    val beatPlayerNode = AudioPlayerNode()
    val beatGainNode = GainNode()
    // Plays back the clean vocals saved on the Recording screen
    val vocalPlayerNode = AudioPlayerNode()
    val vocalGainNode = GainNode()
    val vocalPlayerSplitterNode = BusSplitterNode()
    // Hosts the audio graph of the selected FX chain
    val fxChainNode = SubgraphProcessorNode()
    // Chooses between the FX branch (bus 0) and the dry branch (bus 1)
    val busSelectNode = BusSelectNode()
    val mixerNode = MixerNode()
    // Renders the same graph to a file for exporting and sharing
    val offlineGraphRenderer = OfflineGraphRenderer()

    val harmonizer = HarmonizerEffect()
    val radio = RadioEffect()

    var applyFXChain: Boolean = Config.applyFXChain
        set(value) {
            field = value
            busSelectNode.selectedBus = if (value) 0 else 1
            Config.applyFXChain = value
        }

    init {
        busSelectNode.selectedBus = if (applyFXChain) 0 else 1
        selectFXChain()
        selectFXChainPreset()

        audioGraph.addNode(beatPlayerNode)
        audioGraph.addNode(beatGainNode)
        audioGraph.addNode(vocalPlayerNode)
        audioGraph.addNode(vocalGainNode)
        audioGraph.addNode(vocalPlayerSplitterNode)
        audioGraph.addNode(fxChainNode)
        audioGraph.addNode(busSelectNode)
        audioGraph.addNode(mixerNode)

        audioGraph.connect(beatPlayerNode, beatGainNode)
        audioGraph.connect(beatGainNode, mixerNode)
        audioGraph.connect(vocalPlayerNode, vocalGainNode)
        audioGraph.connect(vocalGainNode, vocalPlayerSplitterNode)
        audioGraph.connect(vocalPlayerSplitterNode, fxChainNode)
        audioGraph.connect(fxChainNode, busSelectNode)              // bus 0: FX branch
        audioGraph.connect(vocalPlayerSplitterNode, busSelectNode)  // bus 1: dry branch
        audioGraph.connect(busSelectNode, mixerNode)
        audioGraph.connect(mixerNode, audioGraph.outputNode)

        beatPlayerNode.isLoopingEnabled = true
        vocalPlayerNode.isLoopingEnabled = true
        audioEngine.enableMicrophone(false)
    }

    // Loads the graph of the FX chain selected in the settings into the subgraph node
    fun selectFXChain() {
        when (Config.selectedFXChainIndex) {
            0 -> fxChainNode.setAudioGraph(harmonizer.audioGraph)
            else -> fxChainNode.setAudioGraph(radio.audioGraph)
        }
    }

    // Applies the low or high preset of the selected FX chain
    fun selectFXChainPreset() {
        when (Config.selectedFXChainIndex) {
            0 -> {
                when (Config.harmonizerPreset) {
                    0 -> harmonizer.setLowPreset()
                    else -> harmonizer.setHighPreset()
                }
            }
            else -> {
                when (Config.radioPreset) {
                    0 -> radio.setLowPreset()
                    else -> radio.setHighPreset()
                }
            }
        }
    }

    fun startPlayback() {
        beatPlayerNode.play()
        vocalPlayerNode.play()
    }

    fun stopPlayback() {
        beatPlayerNode.stop()
        vocalPlayerNode.stop()
    }

    val isPlaying: Boolean
        get() = beatPlayerNode.isPlaying

    fun renderMix() {
        // Render at the higher of the two source sample rates
        val sampleRate = max(vocalPlayerNode.getSourceSampleRate(), beatPlayerNode.getSourceSampleRate())
        // Rewind both players so the render starts from the beginning
        vocalPlayerNode.position = 0.0
        beatPlayerNode.position = 0.0
        offlineGraphRenderer.setSampleRate(sampleRate)
        // Render exactly one pass of the vocal recording
        offlineGraphRenderer.setMaxNumberOfSecondsToRender(vocalPlayerNode.getDuration())
        startPlayback()
        offlineGraphRenderer.processGraph(audioGraph, Config.finalMixRecordingFile, Config.recordingFormat)
        stopPlayback()
    }
}
```

FX Chains

We implement each FX chain as a separate audio graph, which is run by passing it to a SubgraphProcessorNode.

The different effect nodes can be chained inside this subgraph.
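The idea that a chain of effects is itself something you can plug in wherever a single effect fits can be shown in plain Swift. This is a sketch of serial composition only: `BlockEffect`, `Gain`, `HardClip` and `SerialChain` are hypothetical types, not Switchboard nodes, and the SDK's subgraphs can also split and mix signals, which this linear model does not cover.

```swift
// Hypothetical protocol: an effect maps one block of samples to another.
protocol BlockEffect {
    func process(_ block: [Float]) -> [Float]
}

struct Gain: BlockEffect {
    let gain: Float
    func process(_ block: [Float]) -> [Float] { block.map { $0 * gain } }
}

struct HardClip: BlockEffect {
    let limit: Float
    func process(_ block: [Float]) -> [Float] { block.map { min(max($0, -limit), limit) } }
}

// A chain is itself an effect: it runs its stages in series, in order.
struct SerialChain: BlockEffect {
    let stages: [any BlockEffect]
    func process(_ block: [Float]) -> [Float] {
        stages.reduce(block) { partial, stage in stage.process(partial) }
    }
}

let chain = SerialChain(stages: [Gain(gain: 2), HardClip(limit: 0.5)])
print(chain.process([0.1, 0.4, -0.3]))
```

Because `SerialChain` conforms to the same protocol as its stages, chains can be nested or swapped as a unit, which is the property the SubgraphProcessorNode relies on when it hosts a whole FX graph.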

Harmonizer Effect

This effect first corrects the pitch of the voice with an automatic vocal pitch correction node. The corrected signal is then split, and two copies are pitch-shifted by 400 cents (4 semitones) down and up to emulate harmonizing. The shifted voices are mixed back with the pitch-corrected signal, and some reverb is applied after the mixer.
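The pitch shift nodes take their amount in cents: 100 cents is one equal-tempered semitone and 1200 cents is an octave, so the frequency ratio is 2^(cents / 1200). The sketch below computes the ratios for ±400 cents (a major third) and models the mixer stage as plain arithmetic. `frequencyRatio` and `mixHarmony` are illustrative helpers, not SDK functions, and the shifted signals are passed in as inputs because producing them requires a real pitch shifter.

```swift
import Foundation

// Frequency ratio for a shift in cents: 2^(cents / 1200).
func frequencyRatio(cents: Double) -> Double {
    return pow(2.0, cents / 1200.0)
}

// Mixer stage: corrected voice plus both shifted voices, each scaled by its gain node.
func mixHarmony(dry: [Float], low: [Float], high: [Float], harmonyGain: Float) -> [Float] {
    precondition(dry.count == low.count && dry.count == high.count, "buffers must match")
    return (0..<dry.count).map { i in dry[i] + harmonyGain * (low[i] + high[i]) }
}

let up = frequencyRatio(cents: 400)     // a major third up
let down = frequencyRatio(cents: -400)  // a major third down
print(String(format: "A4 440 Hz -> %.1f Hz and %.1f Hz", 440 * up, 440 * down))
```

With the high preset's gains of 1.0, three in-phase voices can sum to up to three times the level of the corrected voice alone, which is worth keeping in mind when choosing the gain values.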

Audio Graph

Code Example

The Swift (iOS) implementation comes first, followed by the Kotlin (Android) implementation.

```swift
import SwitchboardSDK
import SwitchboardSuperpowered

class HarmonizerEffect: FXChain {
    let avpcNode = SBAutomaticVocalPitchCorrectionNode()
    let busSplitterNode = SBBusSplitterNode()
    // Harmony voice 400 cents (4 semitones) below the corrected voice
    let lowPitchShiftNode = SBPitchShiftNode()
    let lowPitchShiftGainNode = SBGainNode()
    // Harmony voice 400 cents (4 semitones) above the corrected voice
    let highPitchShiftNode = SBPitchShiftNode()
    let highPitchShiftGainNode = SBGainNode()
    let mixerNode = SBMixerNode()
    let reverbNode = SBReverbNode()

    // Medium correction speed, quieter harmonies and a smaller reverb
    func setLowPreset() {
        avpcNode.isEnabled = true
        avpcNode.speed = MEDIUM
        avpcNode.range = WIDE
        avpcNode.scale = CMAJOR

        lowPitchShiftNode.isEnabled = true
        lowPitchShiftNode.pitchShiftCents = -400
        lowPitchShiftGainNode.gain = 0.4

        highPitchShiftNode.isEnabled = true
        highPitchShiftNode.pitchShiftCents = 400
        highPitchShiftGainNode.gain = 0.4

        reverbNode.isEnabled = true
        reverbNode.mix = 0.008
        reverbNode.width = 0.7
        reverbNode.damp = 0.5
        reverbNode.roomSize = 0.5
        reverbNode.predelayMs = 10.0
    }

    // Fastest correction speed, full-level harmonies and a larger reverb
    func setHighPreset() {
        avpcNode.isEnabled = true
        avpcNode.speed = EXTREME
        avpcNode.range = WIDE
        avpcNode.scale = CMAJOR

        lowPitchShiftNode.isEnabled = true
        lowPitchShiftNode.pitchShiftCents = -400
        lowPitchShiftGainNode.gain = 1.0

        highPitchShiftNode.isEnabled = true
        highPitchShiftNode.pitchShiftCents = 400
        highPitchShiftGainNode.gain = 1.0

        reverbNode.isEnabled = true
        reverbNode.mix = 0.015
        reverbNode.width = 0.7
        reverbNode.damp = 0.5
        reverbNode.roomSize = 0.75
        reverbNode.predelayMs = 10.0
    }

    override init() {
        super.init()

        switch Config.harmonizerPreset {
        case 0:
            setLowPreset()
        default:
            setHighPreset()
        }

        audioGraph.addNode(avpcNode)
        audioGraph.addNode(busSplitterNode)
        audioGraph.addNode(lowPitchShiftNode)
        audioGraph.addNode(lowPitchShiftGainNode)
        audioGraph.addNode(highPitchShiftNode)
        audioGraph.addNode(highPitchShiftGainNode)
        audioGraph.addNode(mixerNode)
        audioGraph.addNode(reverbNode)

        // Pitch correction → splitter → (corrected, low voice, high voice) → mixer → reverb
        audioGraph.connect(audioGraph.inputNode, to: avpcNode)
        audioGraph.connect(avpcNode, to: busSplitterNode)
        audioGraph.connect(busSplitterNode, to: lowPitchShiftNode)
        audioGraph.connect(busSplitterNode, to: highPitchShiftNode)
        audioGraph.connect(busSplitterNode, to: mixerNode)
        audioGraph.connect(lowPitchShiftNode, to: lowPitchShiftGainNode)
        audioGraph.connect(lowPitchShiftGainNode, to: mixerNode)
        audioGraph.connect(highPitchShiftNode, to: highPitchShiftGainNode)
        audioGraph.connect(highPitchShiftGainNode, to: mixerNode)
        audioGraph.connect(mixerNode, to: reverbNode)
        audioGraph.connect(reverbNode, to: audioGraph.outputNode)

        audioGraph.start()
    }
}
```

```kotlin
import com.synervoz.switchboard.sdk.audiographnodes.BusSplitterNode
import com.synervoz.switchboard.sdk.audiographnodes.GainNode
import com.synervoz.switchboard.sdk.audiographnodes.MixerNode
import com.synervoz.switchboardsuperpowered.audiographnodes.AutomaticVocalPitchCorrectionNode
import com.synervoz.switchboardsuperpowered.audiographnodes.PitchShiftNode
import com.synervoz.switchboardsuperpowered.audiographnodes.ReverbNode
import com.synervoz.vocalfxchainsapp.Config

class HarmonizerEffect : FXChain() {
    private val avpcNode = AutomaticVocalPitchCorrectionNode()
    private val busSplitterNode = BusSplitterNode()
    // Harmony voice 400 cents (4 semitones) below the corrected voice
    private val lowPitchShiftNode = PitchShiftNode()
    private val lowPitchShiftGainNode = GainNode()
    // Harmony voice 400 cents (4 semitones) above the corrected voice
    private val highPitchShiftNode = PitchShiftNode()
    private val highPitchShiftGainNode = GainNode()
    private val mixerNode = MixerNode()
    private val reverbNode = ReverbNode()

    // Medium correction speed, quieter harmonies and a smaller reverb
    fun setLowPreset() {
        avpcNode.isEnabled = true
        avpcNode.speed = AutomaticVocalPitchCorrectionNode.TunerSpeed.MEDIUM
        avpcNode.range = AutomaticVocalPitchCorrectionNode.TunerRange.WIDE
        avpcNode.scale = AutomaticVocalPitchCorrectionNode.TunerScale.CMAJOR

        lowPitchShiftNode.isEnabled = true
        lowPitchShiftNode.pitchShiftCents = -400
        lowPitchShiftGainNode.gain = 0.4f

        highPitchShiftNode.isEnabled = true
        highPitchShiftNode.pitchShiftCents = 400
        highPitchShiftGainNode.gain = 0.4f

        reverbNode.isEnabled = true
        reverbNode.mix = 0.008f
        reverbNode.width = 0.7f
        reverbNode.damp = 0.5f
        reverbNode.roomSize = 0.5f
        reverbNode.predelayMs = 10.0f
    }

    // Fastest correction speed, full-level harmonies and a larger reverb
    fun setHighPreset() {
        avpcNode.isEnabled = true
        avpcNode.speed = AutomaticVocalPitchCorrectionNode.TunerSpeed.EXTREME
        avpcNode.range = AutomaticVocalPitchCorrectionNode.TunerRange.WIDE
        avpcNode.scale = AutomaticVocalPitchCorrectionNode.TunerScale.CMAJOR

        lowPitchShiftNode.isEnabled = true
        lowPitchShiftNode.pitchShiftCents = -400
        lowPitchShiftGainNode.gain = 1.0f

        highPitchShiftNode.isEnabled = true
        highPitchShiftNode.pitchShiftCents = 400
        highPitchShiftGainNode.gain = 1.0f

        reverbNode.isEnabled = true
        reverbNode.mix = 0.015f
        reverbNode.width = 0.7f
        reverbNode.damp = 0.5f
        reverbNode.roomSize = 0.75f
        reverbNode.predelayMs = 10.0f
    }

    init {
        when (Config.harmonizerPreset) {
            0 -> setLowPreset()
            else -> setHighPreset()
        }

        audioGraph.addNode(avpcNode)
        audioGraph.addNode(busSplitterNode)
        audioGraph.addNode(lowPitchShiftNode)
        audioGraph.addNode(lowPitchShiftGainNode)
        audioGraph.addNode(highPitchShiftNode)
        audioGraph.addNode(highPitchShiftGainNode)
        audioGraph.addNode(mixerNode)
        audioGraph.addNode(reverbNode)

        // Pitch correction → splitter → (corrected, low voice, high voice) → mixer → reverb
        audioGraph.connect(audioGraph.inputNode, avpcNode)
        audioGraph.connect(avpcNode, busSplitterNode)
        audioGraph.connect(busSplitterNode, lowPitchShiftNode)
        audioGraph.connect(busSplitterNode, highPitchShiftNode)
        audioGraph.connect(busSplitterNode, mixerNode)
        audioGraph.connect(lowPitchShiftNode, lowPitchShiftGainNode)
        audioGraph.connect(lowPitchShiftGainNode, mixerNode)
        audioGraph.connect(highPitchShiftNode, highPitchShiftGainNode)
        audioGraph.connect(highPitchShiftGainNode, mixerNode)
        audioGraph.connect(mixerNode, reverbNode)
        audioGraph.connect(reverbNode, audioGraph.outputNode)

        audioGraph.start()
    }
}
```

Radio Effect

This effect first applies a bandpass filter centered on 3648 Hz (0.7 octaves wide in the low preset, 0.3 octaves in the high preset), discarding bass and treble, and then applies some distortion. Together these emulate the sound of an analog radio. Finally, some reverb is applied to make the sound a bit richer.
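To see what "0.7 octaves around 3648 Hz" means in practice, the sketch below builds a band-pass biquad from a center frequency and a bandwidth in octaves. It uses the well-known band-pass design from Robert Bristow-Johnson's Audio EQ Cookbook (constant 0 dB peak gain) as a stand-in; it is not necessarily how the SDK's `Bandlimited_Bandpass` filter is implemented, and `BandpassBiquad` and `bandEdges` are illustrative names. The distortion and reverb stages are not modelled.

```swift
import Foundation

// RBJ Audio EQ Cookbook band-pass biquad (0 dB peak gain), bandwidth in octaves.
struct BandpassBiquad {
    let sampleRate: Double
    private let b0, b1, b2, a1, a2: Double  // coefficients normalized so that a0 == 1
    private var x1 = 0.0, x2 = 0.0, y1 = 0.0, y2 = 0.0

    init(centerHz: Double, octaves: Double, sampleRate: Double) {
        self.sampleRate = sampleRate
        let w0 = 2 * Double.pi * centerHz / sampleRate
        let alpha = sin(w0) * sinh(log(2.0) / 2 * octaves * w0 / sin(w0))
        let a0 = 1 + alpha
        b0 = alpha / a0
        b1 = 0
        b2 = -alpha / a0
        a1 = -2 * cos(w0) / a0
        a2 = (1 - alpha) / a0
    }

    // Direct Form I, one sample at a time.
    mutating func process(_ x: Double) -> Double {
        let y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2 = x1; x1 = x
        y2 = y1; y1 = y
        return y
    }

    // Magnitude response |H(e^jw)| at a given frequency in Hz.
    func magnitude(atHz f: Double) -> Double {
        let w = 2 * Double.pi * f / sampleRate
        let nRe = b0 + b1 * cos(w) + b2 * cos(2 * w)
        let nIm = -(b1 * sin(w) + b2 * sin(2 * w))
        let dRe = 1 + a1 * cos(w) + a2 * cos(2 * w)
        let dIm = -(a1 * sin(w) + a2 * sin(2 * w))
        return sqrt((nRe * nRe + nIm * nIm) / (dRe * dRe + dIm * dIm))
    }
}

// Band edges for a bandwidth of N octaves, centered geometrically on centerHz.
func bandEdges(centerHz: Double, octaves: Double) -> (low: Double, high: Double) {
    let half = pow(2.0, octaves / 2)
    return (centerHz / half, centerHz * half)
}

let edges = bandEdges(centerHz: 3648, octaves: 0.7)
print(String(format: "low preset passes roughly %.0f-%.0f Hz", edges.low, edges.high))
```

The high preset's narrower 0.3-octave band cuts more of the voice's body, which gives a thinner, more "telephone-like" sound than the low preset.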

Audio Graph

Code Example

The Swift (iOS) implementation comes first, followed by the Kotlin (Android) implementation.

```swift
import SwitchboardSDK
import SwitchboardSuperpowered

class RadioEffect: FXChain {
    // Keeps a band around 3648 Hz, removing bass and treble
    let bandpassFilterNode = SBFilterNode()
    // Uses its default settings; the presets only configure the filter and the reverb
    let distortionNode = SBGuitarDistortionNode()
    let reverbNode = SBReverbNode()

    // Wider band (0.7 octaves) and a smaller reverb
    func setLowPreset() {
        bandpassFilterNode.isEnabled = true
        bandpassFilterNode.type = Bandlimited_Bandpass
        bandpassFilterNode.frequency = 3648.0
        bandpassFilterNode.octave = 0.7

        reverbNode.isEnabled = true
        reverbNode.mix = 0.008
        reverbNode.width = 0.7
        reverbNode.damp = 0.5
        reverbNode.roomSize = 0.5
        reverbNode.predelayMs = 10.0
    }

    // Narrower band (0.3 octaves) and a larger reverb
    func setHighPreset() {
        bandpassFilterNode.isEnabled = true
        bandpassFilterNode.type = Bandlimited_Bandpass
        bandpassFilterNode.frequency = 3648.0
        bandpassFilterNode.octave = 0.3

        reverbNode.isEnabled = true
        reverbNode.mix = 0.015
        reverbNode.width = 0.7
        reverbNode.damp = 0.5
        reverbNode.roomSize = 0.75
        reverbNode.predelayMs = 10.0
    }

    override init() {
        super.init()

        switch Config.radioPreset {
        case 0:
            setLowPreset()
        default:
            setHighPreset()
        }

        audioGraph.addNode(bandpassFilterNode)
        audioGraph.addNode(distortionNode)
        audioGraph.addNode(reverbNode)

        // Bandpass filter → distortion → reverb
        audioGraph.connect(audioGraph.inputNode, to: bandpassFilterNode)
        audioGraph.connect(bandpassFilterNode, to: distortionNode)
        audioGraph.connect(distortionNode, to: reverbNode)
        audioGraph.connect(reverbNode, to: audioGraph.outputNode)

        audioGraph.start()
    }
}
```

```kotlin
import com.synervoz.switchboardsuperpowered.audiographnodes.FilterNode
import com.synervoz.switchboardsuperpowered.audiographnodes.GuitarDistortionNode
import com.synervoz.switchboardsuperpowered.audiographnodes.ReverbNode
import com.synervoz.vocalfxchainsapp.Config

class RadioEffect : FXChain() {
    // Keeps a band around 3648 Hz, removing bass and treble
    private val bandpassFilterNode = FilterNode()
    // Uses its default settings; the presets only configure the filter and the reverb
    private val distortionNode = GuitarDistortionNode()
    private val reverbNode = ReverbNode()

    // Wider band (0.7 octaves) and a smaller reverb
    fun setLowPreset() {
        bandpassFilterNode.isEnabled = true
        bandpassFilterNode.filterType = FilterNode.FilterType.Bandlimited_Bandpass
        bandpassFilterNode.frequency = 3648.0f
        bandpassFilterNode.octave = 0.7f

        reverbNode.isEnabled = true
        reverbNode.mix = 0.008f
        reverbNode.width = 0.7f
        reverbNode.damp = 0.5f
        reverbNode.roomSize = 0.5f
        reverbNode.predelayMs = 10.0f
    }

    // Narrower band (0.3 octaves) and a larger reverb
    fun setHighPreset() {
        bandpassFilterNode.isEnabled = true
        bandpassFilterNode.filterType = FilterNode.FilterType.Bandlimited_Bandpass
        bandpassFilterNode.frequency = 3648.0f
        bandpassFilterNode.octave = 0.3f

        reverbNode.isEnabled = true
        reverbNode.mix = 0.015f
        reverbNode.width = 0.7f
        reverbNode.damp = 0.5f
        reverbNode.roomSize = 0.75f
        reverbNode.predelayMs = 10.0f
    }

    init {
        when (Config.radioPreset) {
            0 -> setLowPreset()
            else -> setHighPreset()
        }

        audioGraph.addNode(bandpassFilterNode)
        audioGraph.addNode(distortionNode)
        audioGraph.addNode(reverbNode)

        // Bandpass filter → distortion → reverb
        audioGraph.connect(audioGraph.inputNode, bandpassFilterNode)
        audioGraph.connect(bandpassFilterNode, distortionNode)
        audioGraph.connect(distortionNode, reverbNode)
        audioGraph.connect(reverbNode, audioGraph.outputNode)

        audioGraph.start()
    }
}
```
