Online Radio App

About Online Radio

Online radio apps have revolutionized the way we listen to music and stay connected to our favorite radio stations.

These apps provide a seamless and convenient listening experience, allowing us to discover new music and stay updated with the latest news and events.

In this example we will create an app for a real-time online radio experience over the internet, with the ability to broadcast voice, music and sound effects to multiple people in the same virtual channel. The SDK allows you to easily integrate your app with the most common audio communication standards and providers.

We will combine what we learned in the Mixing and Music Ducking examples.

You can find the source code at the following links:

- Online Radio App - iOS

- Online Radio App - Android

The app has the following features:

- Broadcasting audio as a host

- Listening to the host in a channel

- Ability to join arbitrarily-named channels

- Ability to broadcast music in a channel as a host

- Ability to broadcast sound effects in a channel as a host

It consists of the following screens:

- Role Selection: Select your role in the online radio app: host or listener

- Host: Broadcast your voice, music and sound effects in a channel with ducking applied to the audio

- Listener: Listen to the host in a channel

This example uses the Agora Extension.

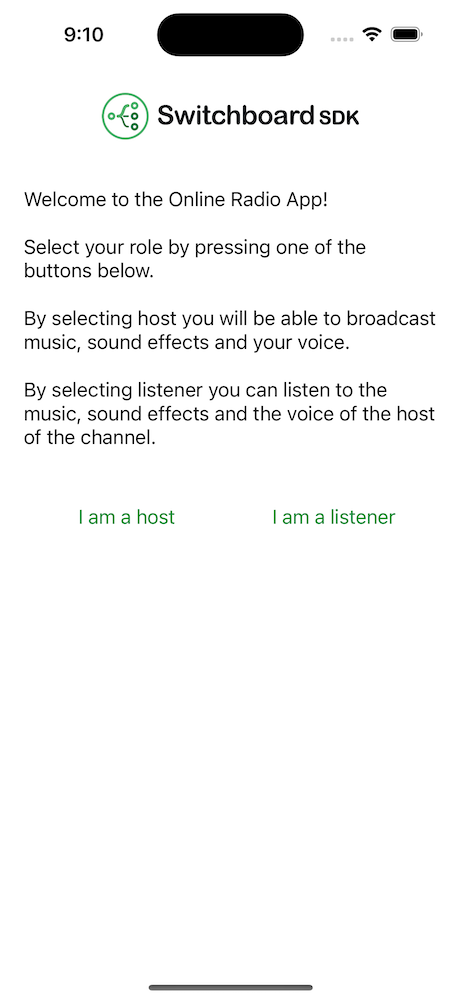

Role Selection

The role selection screen consists of two buttons for role selection.

Selecting "I am a host" takes you to the host screen where you can broadcast audio.

Selecting "I am a listener" takes you to the listener screen where you can listen to a host.

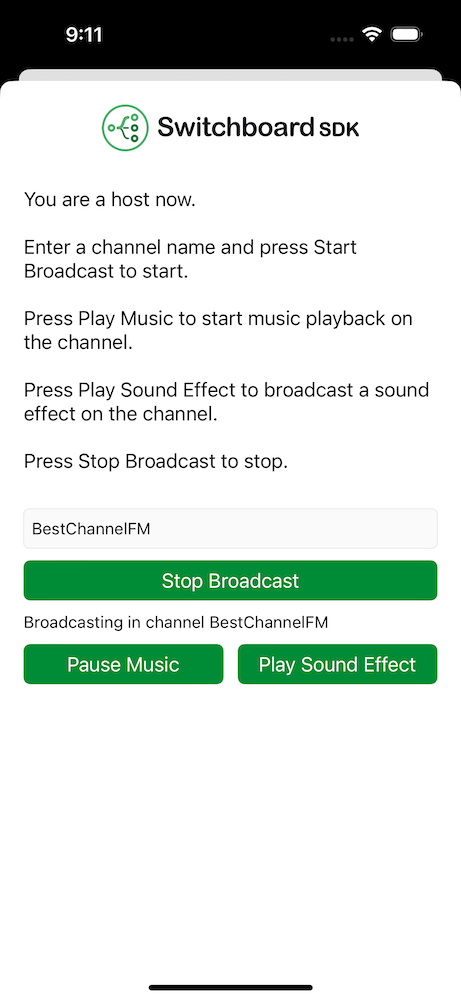

Host

The Host screen consists of a channel name input field, a start broadcast button, and buttons for playing music and a sound effect.

To start broadcasting, you must first enter a channel name. Once the broadcast has started, audio is transmitted to the remote listeners in the same channel.

Pressing the play sound effect button plays the effect locally and also transmits it to the other users in the channel.

If you talk, the music and sound effects are ducked so that the listeners can hear your voice more clearly.

Audio Graph

The audio graph for the Host screen is structured as follows:

The host's microphone input is transmitted into the channel.

We have two separate players for the music and the sound effect. The output of the Music Player and the Effect Player has to be transmitted into the channel as well.

The Ducking Node has two types of input. The first is the audio that needs to be ducked; the remaining inputs carry the control audio, which indicates when the ducking should be applied. In this case, the control audio is the host's microphone input.
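The two input types can be illustrated with a minimal sketch in plain Kotlin. This is not the implementation of the SDK's ducking node: the `SimpleDucker` class is hypothetical, and the threshold, ducked gain, decay and smoothing values are assumptions chosen only for illustration. The control buffer decides when the gain is lowered, but it is never mixed into the output.

```kotlin
import kotlin.math.abs

// Hypothetical, simplified ducker. NOT the SDK's MusicDuckingNode implementation.
class SimpleDucker(
    private val threshold: Float = 0.05f,      // control level that triggers ducking (assumed)
    private val duckedGain: Float = 0.2f,      // gain applied while ducking (assumed)
    private val envelopeDecay: Float = 0.999f, // per-sample decay of the control envelope (assumed)
    private val gainSmoothing: Float = 0.01f,  // per-sample step toward the target gain (assumed)
) {
    private var envelope = 0f
    private var gain = 1f

    // Returns a ducked copy of `program`. `control` only steers the gain.
    fun process(program: FloatArray, control: FloatArray): FloatArray {
        require(program.size == control.size) { "buffers must have equal length" }
        val out = FloatArray(program.size)
        for (i in program.indices) {
            // Peak envelope follower: rises instantly, decays slowly.
            envelope = maxOf(abs(control[i]), envelope * envelopeDecay)
            val target = if (envelope > threshold) duckedGain else 1f
            // Move gradually toward the target gain to avoid audible clicks.
            gain += (target - gain) * gainSmoothing
            out[i] = program[i] * gain
        }
        return out
    }
}
```

With a silent control buffer the program passes through unchanged; with a loud control buffer the gain glides from 1.0 toward the ducked gain over the following samples.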

The ducked audio is the mix of the Music and Effect players, because we want to lower both the music and the effects while the host is speaking.
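One way to reason about the routing described in this section is to write the connections down as a small directed graph and check which sources reach which destinations. The sketch below is plain Kotlin rather than Switchboard SDK code: the node names are illustrative strings, and `Edge` and `audioReach` are hypothetical helpers. It assumes the ducking node uses its control input only to steer the gain and does not mix it into its output.

```kotlin
// Illustrative model only: strings stand in for the SDK's audio graph nodes.
data class Edge(val from: String, val to: String, val isControl: Boolean = false)

val hostEdges = listOf(
    Edge("effectsPlayer", "playerMixer"),
    Edge("musicPlayer", "playerMixer"),
    Edge("playerMixer", "musicDucking"),
    Edge("microphone", "inputSplitter"),
    Edge("inputSplitter", "inputToMono"),
    // The microphone only controls the ducking; it is not part of the ducked audio.
    Edge("inputToMono", "musicDucking", isControl = true),
    Edge("musicDucking", "duckingSplitter"),
    Edge("inputSplitter", "agoraOutputMixer"),
    Edge("duckingSplitter", "agoraOutputMixer"),
    Edge("agoraOutputMixer", "outputToMono"),
    Edge("outputToMono", "agoraSink"),
    Edge("duckingSplitter", "speaker"),
)

// Returns every node reachable from `start` by following audio (non-control) edges.
fun audioReach(start: String, edges: List<Edge>): Set<String> {
    val visited = mutableSetOf<String>()
    val queue = ArrayDeque(listOf(start))
    while (queue.isNotEmpty()) {
        val node = queue.removeFirst()
        for (edge in edges) {
            if (edge.from == node && !edge.isControl && visited.add(edge.to)) {
                queue.addLast(edge.to)
            }
        }
    }
    return visited
}
```

In this model, `audioReach("microphone", hostEdges)` contains `agoraSink` but not `speaker`, so the host's voice goes to the channel without being played back locally, while `audioReach("musicPlayer", hostEdges)` contains both.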

Code Example

Swift

```swift
import SwitchboardSDK
import SwitchboardAgora

class HostAudioSystem {
    let audioEngine = SBAudioEngine()
    let audioGraph = SBAudioGraph()
    let agoraResampledSinkNode = SBResampledSinkNode()
    let musicPlayerNode = SBAudioPlayerNode()
    let effectsPlayerNode = SBAudioPlayerNode()
    let playerMixerNode = SBMixerNode()
    let inputSplitterNode = SBBusSplitterNode()
    let inputMultiChannelToMonoNode = SBMultiChannelToMonoNode()
    let musicDuckingNode = SBMusicDuckingNode()
    let duckingSplitterNode = SBBusSplitterNode()
    let agoraOutputMixerNode = SBMixerNode()
    let multiChannelToMonoNode = SBMultiChannelToMonoNode()

    var isPlaying: Bool {
        musicPlayerNode.isPlaying
    }

    init(roomManager: RoomManager) {
        audioEngine.microphoneEnabled = true
        audioEngine.voiceProcessingEnabled = true

        // Audio sent to this sink is transmitted into the channel.
        agoraResampledSinkNode.sinkNode = roomManager.sinkNode
        agoraResampledSinkNode.internalSampleRate = roomManager.audioBus.getSampleRate()

        // Load the bundled music and sound effect files.
        let music = Bundle.main.url(forResource: "EMH-My_Lover", withExtension: "mp3")!
        let effect = Bundle.main.url(forResource: "airhorn", withExtension: "mp3")!
        musicPlayerNode.isLoopingEnabled = true
        musicPlayerNode.load(music.absoluteString, withFormat: .apple)
        effectsPlayerNode.load(effect.absoluteString, withFormat: .apple)

        audioGraph.addNode(agoraResampledSinkNode)
        audioGraph.addNode(musicPlayerNode)
        audioGraph.addNode(effectsPlayerNode)
        audioGraph.addNode(playerMixerNode)
        audioGraph.addNode(inputSplitterNode)
        audioGraph.addNode(inputMultiChannelToMonoNode)
        audioGraph.addNode(musicDuckingNode)
        audioGraph.addNode(duckingSplitterNode)
        audioGraph.addNode(agoraOutputMixerNode)
        audioGraph.addNode(multiChannelToMonoNode)

        // Mix the music and the sound effect; the mix is the audio to be ducked.
        audioGraph.connect(effectsPlayerNode, to: playerMixerNode)
        audioGraph.connect(musicPlayerNode, to: playerMixerNode)
        audioGraph.connect(playerMixerNode, to: musicDuckingNode)

        // The microphone input is the control audio of the ducking node.
        audioGraph.connect(audioGraph.inputNode, to: inputSplitterNode)
        audioGraph.connect(inputSplitterNode, to: inputMultiChannelToMonoNode)
        audioGraph.connect(inputMultiChannelToMonoNode, to: musicDuckingNode)
        audioGraph.connect(musicDuckingNode, to: duckingSplitterNode)

        // Send the microphone and the ducked mix into the channel.
        audioGraph.connect(inputSplitterNode, to: agoraOutputMixerNode)
        audioGraph.connect(duckingSplitterNode, to: agoraOutputMixerNode)
        audioGraph.connect(agoraOutputMixerNode, to: multiChannelToMonoNode)
        audioGraph.connect(multiChannelToMonoNode, to: agoraResampledSinkNode)

        // Play the ducked mix on the local output as well.
        audioGraph.connect(duckingSplitterNode, to: audioGraph.outputNode)
    }

    func start() {
        audioEngine.start(audioGraph)
    }

    func stop() {
        audioEngine.stop()
    }

    func playMusic() {
        musicPlayerNode.play()
    }

    func pauseMusic() {
        musicPlayerNode.pause()
    }

    func playSoundEffect() {
        // Stop any running playback before triggering the effect again.
        effectsPlayerNode.stop()
        effectsPlayerNode.play()
    }
}
```

Kotlin

```kotlin
import com.synervoz.switchboard.sdk.audioengine.AudioEngine
import com.synervoz.switchboard.sdk.audiograph.AudioGraph
import com.synervoz.switchboard.sdk.audiographnodes.AudioPlayerNode
import com.synervoz.switchboard.sdk.audiographnodes.BusSplitterNode
import com.synervoz.switchboard.sdk.audiographnodes.MixerNode
import com.synervoz.switchboard.sdk.audiographnodes.MultiChannelToMonoNode
import com.synervoz.switchboard.sdk.audiographnodes.MusicDuckingNode
import com.synervoz.switchboard.sdk.audiographnodes.ResampledSinkNode
import com.synervoz.switchboardagora.rooms.RoomManager

class HostAudioSystem(roomManager: RoomManager) {
    val audioEngine = AudioEngine(enableInput = true)
    val audioGraph = AudioGraph()
    val agoraResampledSinkNode = ResampledSinkNode()
    val musicPlayerNode = AudioPlayerNode()
    val effectsPlayerNode = AudioPlayerNode()
    val playerMixerNode = MixerNode()
    val inputSplitterNode = BusSplitterNode()
    val inputMultiChannelToMonoNode = MultiChannelToMonoNode()
    val musicDuckingNode = MusicDuckingNode()
    val duckingSplitterNode = BusSplitterNode()
    val agoraOutputMixerNode = MixerNode()
    val multiChannelToMonoNode = MultiChannelToMonoNode()

    val isPlaying: Boolean
        get() = musicPlayerNode.isPlaying

    init {
        // Audio sent to this sink is transmitted into the channel.
        agoraResampledSinkNode.setSinkNode(roomManager.sinkNode)
        agoraResampledSinkNode.internalSampleRate = roomManager.getAudioBus().sampleRate
        musicPlayerNode.isLoopingEnabled = true

        audioGraph.addNode(agoraResampledSinkNode)
        audioGraph.addNode(musicPlayerNode)
        audioGraph.addNode(effectsPlayerNode)
        audioGraph.addNode(playerMixerNode)
        audioGraph.addNode(inputSplitterNode)
        audioGraph.addNode(inputMultiChannelToMonoNode)
        audioGraph.addNode(musicDuckingNode)
        audioGraph.addNode(duckingSplitterNode)
        audioGraph.addNode(agoraOutputMixerNode)
        audioGraph.addNode(multiChannelToMonoNode)

        // Mix the music and the sound effect; the mix is the audio to be ducked.
        audioGraph.connect(effectsPlayerNode, playerMixerNode)
        audioGraph.connect(musicPlayerNode, playerMixerNode)
        audioGraph.connect(playerMixerNode, musicDuckingNode)

        // The microphone input is the control audio of the ducking node.
        audioGraph.connect(audioGraph.inputNode, inputSplitterNode)
        audioGraph.connect(inputSplitterNode, inputMultiChannelToMonoNode)
        audioGraph.connect(inputMultiChannelToMonoNode, musicDuckingNode)
        audioGraph.connect(musicDuckingNode, duckingSplitterNode)

        // Send the microphone and the ducked mix into the channel.
        audioGraph.connect(inputSplitterNode, agoraOutputMixerNode)
        audioGraph.connect(duckingSplitterNode, agoraOutputMixerNode)
        audioGraph.connect(agoraOutputMixerNode, multiChannelToMonoNode)
        audioGraph.connect(multiChannelToMonoNode, agoraResampledSinkNode)

        // Play the ducked mix on the local output as well.
        audioGraph.connect(duckingSplitterNode, audioGraph.outputNode)
    }

    fun start() {
        audioEngine.start(audioGraph)
    }

    fun stop() {
        audioEngine.stop()
    }

    fun playMusic() {
        musicPlayerNode.play()
    }

    fun pauseMusic() {
        musicPlayerNode.pause()
    }

    fun playSoundEffect() {
        // Stop any running playback before triggering the effect again.
        effectsPlayerNode.stop()
        effectsPlayerNode.play()
    }

    // Release the graph, the engine and every node when the host screen is done.
    fun close() {
        audioGraph.close()
        audioEngine.close()
        agoraResampledSinkNode.close()
        musicPlayerNode.close()
        effectsPlayerNode.close()
        playerMixerNode.close()
        inputSplitterNode.close()
        inputMultiChannelToMonoNode.close()
        musicDuckingNode.close()
        duckingSplitterNode.close()
        agoraOutputMixerNode.close()
        multiChannelToMonoNode.close()
    }
}
```

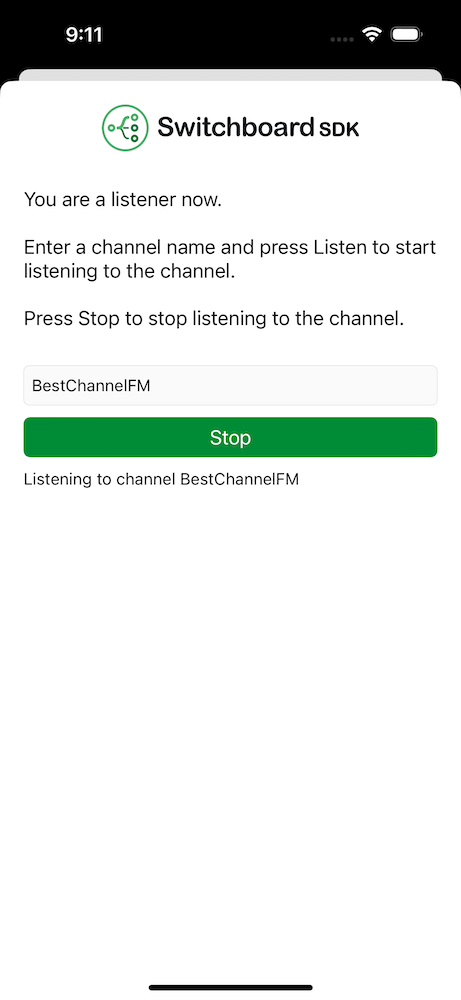

Listener

The Listener screen consists of a channel name input field and a listen button.

To start listening, you must first enter a channel name. After you press the listen button, you can hear the audio coming from the host in the same channel.

Audio Graph

The audio graph for the Listener screen is structured as follows:

The ResampledSourceNode is needed to convert between the communication system's audio bus sample rate and the device sample rate. The source node receives the remote audio, which is then routed to the speaker output.
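To make the sample rate conversion concrete, here is a minimal sketch in plain Kotlin. It is not the algorithm used by the SDK's resampling nodes: `resampleLinear` and `monoToInterleaved` are hypothetical helpers, the 16 kHz and 48 kHz rates in the usage note are assumed values, and linear interpolation is used only because it is short. A production resampler would also apply a low-pass filter when lowering the sample rate.

```kotlin
// Hypothetical linear-interpolation resampler. NOT the algorithm used by the SDK.
fun resampleLinear(input: FloatArray, fromRate: Int, toRate: Int): FloatArray {
    require(fromRate > 0 && toRate > 0) { "sample rates must be positive" }
    if (input.isEmpty()) return FloatArray(0)
    val outSize = (input.size.toLong() * toRate / fromRate).toInt()
    val step = fromRate.toDouble() / toRate // input samples advanced per output sample
    return FloatArray(outSize) { i ->
        val pos = i * step
        val left = pos.toInt().coerceAtMost(input.size - 1)
        val right = (left + 1).coerceAtMost(input.size - 1)
        val frac = (pos - left).toFloat()
        // Blend the two neighbouring input samples.
        input[left] + (input[right] - input[left]) * frac
    }
}

// Copies a mono buffer into every channel of an interleaved multi-channel buffer.
fun monoToInterleaved(mono: FloatArray, channels: Int): FloatArray {
    require(channels > 0) { "channel count must be positive" }
    return FloatArray(mono.size * channels) { i -> mono[i / channels] }
}
```

For example, if the audio bus delivered 160 mono samples at an assumed 16 kHz and the device ran at 48 kHz in stereo, `monoToInterleaved(resampleLinear(buffer, 16000, 48000), 2)` would produce 960 interleaved samples covering the same duration.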

Code Example

Swift

```swift
import SwitchboardSDK
import SwitchboardAgora

class ListenerAudioSystem {
    let audioEngine = SBAudioEngine()
    let audioGraph = SBAudioGraph()
    let agoraResampledSourceNode = SBResampledSourceNode()
    let monoToMultiChannelNode = SBMonoToMultiChannelNode()

    init(roomManager: RoomManager) {
        // The source node receives the remote audio from the channel.
        agoraResampledSourceNode.sourceNode = roomManager.sourceNode
        agoraResampledSourceNode.internalSampleRate = roomManager.audioBus.getSampleRate()

        audioGraph.addNode(agoraResampledSourceNode)
        audioGraph.addNode(monoToMultiChannelNode)

        // Route the remote audio to the speaker output.
        audioGraph.connect(agoraResampledSourceNode, to: monoToMultiChannelNode)
        audioGraph.connect(monoToMultiChannelNode, to: audioGraph.outputNode)
    }

    func start() {
        audioEngine.start(audioGraph)
    }

    func stop() {
        audioEngine.stop()
    }
}
```

Kotlin

```kotlin
import com.synervoz.switchboard.sdk.audioengine.AudioEngine
import com.synervoz.switchboard.sdk.audiograph.AudioGraph
import com.synervoz.switchboard.sdk.audiographnodes.MonoToMultiChannelNode
import com.synervoz.switchboard.sdk.audiographnodes.ResampledSourceNode
import com.synervoz.switchboardagora.rooms.RoomManager

class ListenerAudioSystem(roomManager: RoomManager) {
    val audioEngine = AudioEngine(enableInput = true)
    val audioGraph = AudioGraph()
    val agoraResampledSourceNode = ResampledSourceNode()
    val monoToMultiChannelNode = MonoToMultiChannelNode()

    init {
        // The source node receives the remote audio from the channel.
        agoraResampledSourceNode.setSourceNode(roomManager.sourceNode)
        agoraResampledSourceNode.internalSampleRate = roomManager.getAudioBus().sampleRate

        audioGraph.addNode(agoraResampledSourceNode)
        audioGraph.addNode(monoToMultiChannelNode)

        // Route the remote audio to the speaker output.
        audioGraph.connect(agoraResampledSourceNode, monoToMultiChannelNode)
        audioGraph.connect(monoToMultiChannelNode, audioGraph.outputNode)
    }

    fun start() {
        audioEngine.start(audioGraph)
    }

    fun stop() {
        audioEngine.stop()
    }

    // Release the graph, the engine and every node when the listener screen is done.
    fun close() {
        audioGraph.close()
        audioEngine.close()
        agoraResampledSourceNode.close()
        monoToMultiChannelNode.close()
    }
}
```
